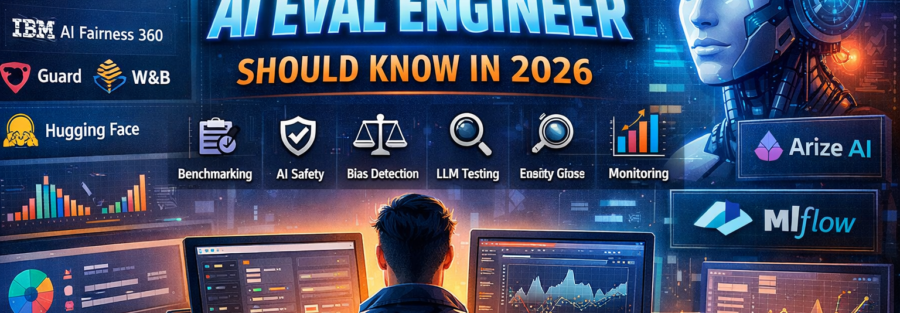

Tools Every AI Eval Engineer Should Know in 2026 (With Real Platforms)

AI evaluation in 2026 is no longer theoretical. It requires hands-on experience with specialized platforms built for benchmarking, monitoring, hallucination detection, bias analysis, safety testing, and production observability.

Modern AI systems are no longer single models. They include:

- Large Language Models (LLMs)

• RAG pipelines

• AI agents

• Multi-step reasoning workflows

• Distributed inference systems

Because of this complexity, AI evaluation tools now fall into six major categories:

- Testing

- Evaluation Frameworks & Experimentation

- Observability

- Production Monitoring

- Bias & Responsible AI

- Safety & Adversarial Testing

Let’s break down the real platforms dominating each category.

1. Testing Tools for LLM & AI Systems

Testing generative AI is fundamentally different from traditional software testing. Outputs are probabilistic, not deterministic. You measure quality, not just correctness.

OpenAI Evals

An open-source benchmarking framework for large language models.

Why it matters:

- Create custom evaluation datasets

- Run automated regression tests

- Compare different model versions

- Detect hallucinations and instruction failures

It is widely used for structured benchmarking of GPT-style models.

DeepEval

A dedicated LLM evaluation framework designed for automated quality scoring.

Key strengths:

- Faithfulness scoring

- Answer relevance evaluation

- Custom evaluation metrics

- Automated test case execution

DeepEval helps engineers treat LLM outputs like unit-testable components.

Ragas

Purpose-built for evaluating RAG (Retrieval-Augmented Generation) systems.

Core metrics include:

- Context precision

• Context recall

• Faithfulness

• Answer correctness

If you’re building search-powered AI applications, Ragas is essential.

2. AI Evaluation Frameworks & Experiment Platforms

Testing outputs is not enough. AI teams need structured experiment tracking, dataset management, and version comparison.

LangSmith

Built for LLM applications and AI agents.

Key features:

- Prompt version tracking

- Trace-level debugging

- Dataset-driven evaluation

- Agent workflow inspection

Critical for teams building multi-step chains and AI agents.

Braintrust

A modern experimentation and evaluation platform.

Why it stands out:

- Evaluation dataset management

- Model comparison dashboards

- Human-in-the-loop + automated scoring

- Prompt iteration tracking

Braintrust enables structured, scalable evaluation pipelines.

Weights & Biases

Originally built for ML experiment tracking, now heavily used for LLM evaluation.

Used for:

- Experiment tracking

- Model comparison dashboards

- Metric visualization

- Integration with PyTorch, TensorFlow, Hugging Face

Ensures reproducibility and structured experimentation.

MLflow

A lifecycle management platform for ML systems.

Tracks:

- Model versions

- Parameters

- Evaluation metrics

- Deployment stages

Essential for CI/CD-driven AI workflows.

3. Observability for AI Pipelines

Observability answers the most important debugging question:

Why did the model behave this way?

Modern AI systems are distributed across APIs, vector databases, embeddings, and inference endpoints.

OpenTelemetry

A standardized tracing and metrics framework.

Why it matters:

- Distributed tracing

- Latency tracking

- Infrastructure visibility

- Integration with Grafana, Datadog, and cloud stacks

OTEL connects complex AI pipelines into a single trace.

Hugging Face + Open LLM Leaderboards

Provides:

- Standard benchmark datasets

- Model comparison leaderboards

- Evaluation pipelines

AI Eval Engineers use it for:

- MMLU benchmarking

- Multilingual testing

- Reasoning evaluation

4. Production Monitoring & Reliability

Monitoring ensures models behave correctly after deployment.

LLM failures are subtle:

- Hallucinations

- Drift

- Bias

- Unsafe outputs

Arize AI

A leading AI observability platform.

Tracks:

- Output drift

- Embedding drift

- Hallucination rates

- Performance degradation

Critical for large-scale production AI systems.

Galileo

Specializes in LLM evaluation and hallucination detection.

Focus areas:

- Root cause analysis

- Prompt debugging

- Retrieval evaluation

- Hallucination detection

Especially powerful for RAG systems.

WhyLabs

Focused on:

- Data drift detection

- Anomaly detection

- AI system reliability

Useful for maintaining stable LLM pipelines.

5. Bias, Fairness & Responsible AI

AI evaluation must include fairness and compliance checks, especially in regulated industries.

AI Fairness 360

A comprehensive fairness toolkit.

Helps:

- Detect demographic bias

- Apply mitigation algorithms

- Generate fairness reports

Essential in healthcare, finance, and government applications.

Responsible AI Toolbox

Evaluates:

- Fairness

- Explainability

- Error analysis

- Causal insights

Strong for enterprise-grade AI systems.

6. AI Safety & Adversarial Testing

Public-facing AI systems must be tested against attacks.

Lakera (Lakera Guard)

Focus areas:

- Prompt injection detection

- Jailbreak resistance

- Data leakage prevention

Highly relevant for AI products exposed to users.

Anthropic Safety Evaluations

Anthropic publishes structured alignment and safety methodologies used for:

- Harmful content detection

• Model alignment evaluation

• Policy compliance testing

These approaches influence how modern AI safety pipelines are designed.

The Modern AI Eval Stack in 2026

A real-world AI evaluation stack typically looks like this:

Testing

→ OpenAI Evals, DeepEval, Ragas

Frameworks & Experimentation

→ LangSmith, Braintrust, Weights & Biases, MLflow

Observability

→ OpenTelemetry, Hugging Face Benchmarks

Monitoring

→ Arize AI, Galileo, WhyLabs

Bias & Responsible AI

→ AI Fairness 360, Responsible AI Toolbox

Safety & Adversarial Testing

→ Lakera Guard, Anthropic Safety Evaluations

Final Thoughts

In 2026, AI evaluation is not just about accuracy.

It is about:

- Reliability

- Safety

- Bias detection

- Hallucination monitoring

- Real-world robustness

- Continuous production tracking

AI evaluation is now:

- Continuous

- Automated

- Integrated into CI/CD

- Safety-aware

- Observability-driven

Tools like OpenAI Evals, LangSmith, Arize AI, Braintrust, DeepEval, Ragas, and OpenTelemetry have become core infrastructure for serious AI teams.

If you want to become a successful AI Eval Engineer, mastering these platforms is just as important as understanding machine learning theory.

Because in 2026:

Building AI is easy.

Evaluating it correctly is what makes you valuable.