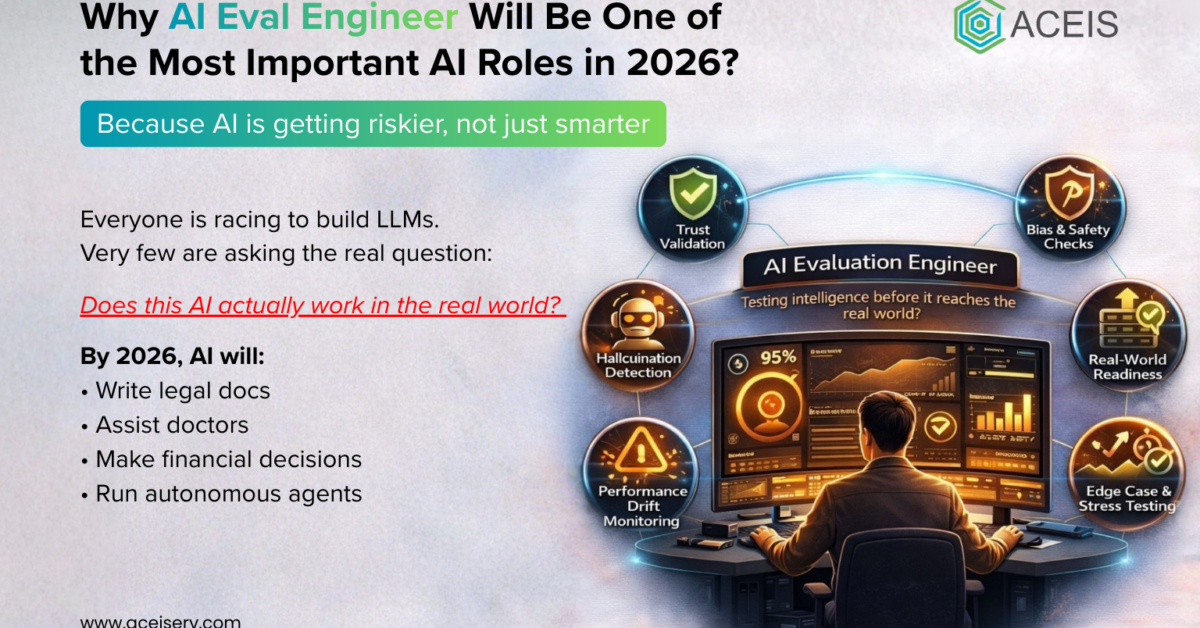

Why the AI Eval Engineer Will Be One of the Most Important AI Roles in 2026

Artificial Intelligence is no longer just about building models. In 2026, the real competitive edge won’t come from who trains the biggest AI, but from who can prove that AI actually works, behaves safely, and delivers value.

That’s exactly why the AI Evaluation Engineer (AI Eval Engineer) is emerging as one of the most critical AI roles of 2026.

As companies race to deploy large language models (LLMs), autonomous agents, and generative AI systems into real products, one uncomfortable truth has become clear:

AI systems often fail silently: they return fluent, confident answers that are wrong, and nothing crashes to warn you.

And that’s where AI Eval Engineers step in.

What Is an AI Eval Engineer?

An AI Eval Engineer designs, runs, and maintains evaluation frameworks that measure how well AI systems perform in the real world.

Unlike traditional ML engineers, who focus on training models, AI Eval Engineers focus on questions like:

- Is the AI actually correct, not just confident?

- Is it safe, unbiased, and reliable at scale?

- Does it generalize across edge cases and real user behavior?

- Is performance improving, or quietly degrading?

In short, they answer one of the hardest questions in AI:

“Can we trust this model?”

Why AI Evaluation Is Becoming Mission-Critical in 2026

1. LLMs Are Moving Into High-Risk Domains

By 2026, AI systems are deeply embedded in:

- Healthcare diagnostics

- Legal research and contract analysis

- Financial decision-making

- Customer support automation

- Autonomous agents and copilots

In these environments, hallucinations, bias, or small logic errors are not acceptable.

AI Eval Engineers ensure models are:

- Auditable

- Measurable

- Aligned with real-world outcomes

This is why AI evaluation is shifting from “nice to have” to non-negotiable.

2. Accuracy Metrics Alone Are No Longer Enough

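Traditional metrics like accuracy, BLEU, or ROUGE are insufficient on their own for modern AI systems. BLEU and ROUGE reward n-gram overlap with a reference answer, and accuracy assumes a single correct label, so none of them tells you whether a free-form answer is actually right.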
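
To see why overlap metrics can mislead, here is a simplified ROUGE-1-style unigram F1 (whitespace tokenization, no stemming; a sketch, not the official ROUGE package). The example sentences are made up for illustration. A factually wrong answer that copies the reference’s wording outscores a correct paraphrase.

```python
from collections import Counter

def unigram_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1-style F1: harmonic mean of unigram precision and recall."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

reference = "the eiffel tower is in paris"
wrong = "the eiffel tower is in rome"        # factually wrong, same wording
paraphrase = "it stands in paris france"     # factually right, different wording

print(round(unigram_f1(wrong, reference), 2))       # 0.83
print(round(unigram_f1(paraphrase, reference), 2))  # 0.36
```

The wrong answer shares five of six tokens with the reference, so it scores high; that gap is what factual-correctness checks and model-based graders are meant to close.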

Today’s AI needs evaluation across:

- Factual correctness

- Reasoning quality

- Robustness to adversarial prompts

- Toxicity and bias

- Instruction-following

- Long-context behavior

- Tool-use reliability

AI Eval Engineers build custom evaluation pipelines, often combining:

- Automated benchmarks

- Human-in-the-loop evaluation

- Synthetic test data

- Model-based graders
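
A minimal sketch of how these pieces can fit together: synthetic cases with known answers, a strict automated check, a lenient "model-based" grader, and a human-review queue for cases where the two disagree. Every name here (`EvalCase`, `judge_passes`, `toy_model`) is hypothetical, and `judge_passes` is a simple string stub standing in for a real judge model.

```python
from dataclasses import dataclass

@dataclass
class EvalCase:
    prompt: str
    expected: str  # reference answer from a benchmark or a synthetic generator

def synthetic_cases() -> list[EvalCase]:
    # Synthetic test data: templated arithmetic questions with known answers.
    pairs = [(2, 3), (10, 7), (41, 1)]
    return [EvalCase(f"What is {a} + {b}?", str(a + b)) for a, b in pairs]

def exact_match(output: str, expected: str) -> bool:
    # Automated, deterministic check (benchmark-style grading).
    return output.strip() == expected

def judge_passes(output: str, expected: str) -> bool:
    # HYPOTHETICAL stand-in for a model-based grader. A real one would send the
    # prompt and output to a judge LLM and parse its verdict; this stub only
    # checks whether the expected answer appears anywhere in the output.
    return expected in output

def run_pipeline(model, cases: list[EvalCase]) -> dict:
    results = {"passed": 0, "failed": 0, "needs_human_review": []}
    for case in cases:
        output = model(case.prompt)
        strict = exact_match(output, case.expected)
        lenient = judge_passes(output, case.expected)
        if strict != lenient:
            # The graders disagree: route the case to human-in-the-loop review.
            results["needs_human_review"].append((case.prompt, output))
        elif strict:
            results["passed"] += 1
        else:
            results["failed"] += 1
    return results

def toy_model(prompt: str) -> str:
    # HYPOTHETICAL model under test with canned answers.
    canned = {"What is 2 + 3?": "5", "What is 10 + 7?": "The answer is 17.", "What is 41 + 1?": "43"}
    return canned[prompt]

print(run_pipeline(toy_model, synthetic_cases()))
```

Here the "10 + 7" case goes to the review queue: exact match rejects "The answer is 17." while the lenient grader accepts it. Spending human attention only on grader disagreements is one common way to keep human-in-the-loop evaluation affordable.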

This is effectively a new engineering discipline.

3. AI Is Becoming Continuous, Not Static

In 2026, AI systems:

- Update weekly (or daily)

- Learn from user feedback

- Interact with other AI agents

- Change behavior over time

That means evaluation can’t be a one-time task.

AI Eval Engineers design continuous evaluation systems that:

- Detect performance regressions

- Compare model versions (A/B testing for AI)

- Monitor drift in production

- Flag unexpected behavior early
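
A common building block for regression detection is a significance test on pass rates, so that a new model version is flagged only when its drop is unlikely to be noise. The sketch below uses a one-sided two-proportion z-test with the standard library; the pass counts and the `alpha` threshold are hypothetical.

```python
import math

def two_proportion_z_test(pass_a: int, n_a: int, pass_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, one-sided p-value) for H1: version B's pass rate is lower than version A's."""
    p_a, p_b = pass_a / n_a, pass_b / n_b
    pooled = (pass_a + pass_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 0.5  # both versions all-pass or all-fail: no evidence of a change
    z = (p_b - p_a) / se
    # One-sided p-value P(Z <= z) from the standard normal CDF.
    p_value = 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return z, p_value

def is_regression(pass_a: int, n_a: int, pass_b: int, n_b: int, alpha: float = 0.05) -> bool:
    """Flag version B as a regression if its drop in pass rate is significant at level alpha."""
    _, p_value = two_proportion_z_test(pass_a, n_a, pass_b, n_b)
    return p_value < alpha

# Hypothetical numbers: the baseline passes 460/500 cases.
print(is_regression(460, 500, 430, 500))  # True  (92% -> 86%)
print(is_regression(460, 500, 455, 500))  # False (92% -> 91%)
```

This test assumes independent samples, which fits A/B traffic split between versions. When both versions are scored on the same fixed prompt set, a paired test such as McNemar’s is usually the better choice.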

Think of them as quality engineers for intelligence itself.

Why Companies Are Actively Hiring AI Eval Engineers

Big tech and AI-first startups already know this:

Shipping AI without evaluation is a legal, ethical, and financial risk.

In 2026, companies are hiring AI Eval Engineers because they:

- Reduce costly AI failures

- Support AI governance and compliance

- Improve user trust and adoption

- Enable safer AI deployments at scale

- Make AI teams faster and more confident

Roles with titles like:

- AI Evaluation Engineer

- LLM Evaluation Engineer

- AI Quality Engineer

- Responsible AI Engineer

…are becoming mainstream across startups, enterprises, and research labs.

Skills That Make AI Eval Engineers So Valuable

AI Eval Engineers sit at the intersection of engineering, data science, and human judgment.

Tools Required for an AI Eval Engineer

1. Testing & Automation Tools

These cover the software-testing and automation fundamentals that evaluation work builds on.

- Pytest – test case design, execution, reporting

- Selenium – UI and workflow automation

- Robot Framework – keyword-driven test automation

- Playwright – modern web testing (often preferred over Selenium)

- Postman / Newman – API testing for AI services

- Allure / TestRail – test reporting and dashboards
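
As an illustration of applying ordinary test tooling to AI behavior, here is a pytest-style file. pytest collects functions named `test_*` and reports plain `assert` failures, so the file needs no pytest import. `classify_ticket` is a hypothetical system under test, stubbed with keywords so the file runs offline; in practice it would call the deployed model.

```python
# test_support_bot.py: pytest discovers functions named test_* automatically.

def classify_ticket(text: str) -> str:
    # HYPOTHETICAL system under test. A real suite would call the LLM service;
    # this keyword stub keeps the example self-contained.
    lowered = text.lower()
    if "refund" in lowered or "charged twice" in lowered:
        return "billing"
    if "password" in lowered or "log in" in lowered:
        return "account"
    return "other"

ALLOWED_LABELS = {"billing", "account", "other"}

CASES = [
    ("I was charged twice this month", "billing"),
    ("Please REFUND my order", "billing"),              # casing edge case
    ("I can't log in after resetting my password", "account"),
    ("", "other"),                                       # empty-input edge case
]

def test_labels_match_expected():
    for text, expected in CASES:
        assert classify_ticket(text) == expected, text

def test_output_is_always_an_allowed_label():
    # Format check: the system must never return free text outside the label set.
    for text, _ in CASES:
        assert classify_ticket(text) in ALLOWED_LABELS
```

Run it with `pytest test_support_bot.py`. In a larger suite, `@pytest.mark.parametrize` gives one reported result per case instead of one per loop.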

2. LLM & Generative AI Evaluation Frameworks

Directly aligned with AI evaluation responsibilities.

- Arize Phoenix – tracing, observability, evals

- Braintrust – experiment tracking and prompt evaluation

- DeepEval – LLM quality, hallucination, relevance testing

- LangSmith – prompt tracing, debugging, and evals

- Ragas – RAG evaluation (context relevance, faithfulness, recall)
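
To make "faithfulness" concrete: Ragas’s faithfulness metric is, roughly, the share of claims in an answer that can be inferred from the retrieved context, with an LLM doing the claim extraction and checking. The toy function below is not the Ragas API. It is a crude lexical stand-in that treats each sentence as one claim and counts it as supported if all of its non-stopword tokens appear in the context. The stopword list and example texts are assumptions.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "was", "in", "of", "and", "it", "to"}

def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def toy_faithfulness(answer: str, context: str) -> float:
    """Fraction of answer sentences whose content words all appear in the context."""
    claims = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not claims:
        return 0.0
    ctx = content_words(context)
    supported = sum(1 for claim in claims if content_words(claim) <= ctx)
    return supported / len(claims)

context = "The Eiffel Tower was completed in 1889. It is located in Paris."
answer = "The Eiffel Tower is located in Paris. It was completed in 1925."
print(toy_faithfulness(answer, context))  # 0.5
```

The second sentence introduces "1925", which the context never mentions, so only half the claims count as grounded. Real implementations replace the word-overlap test with model-based entailment checks, because paraphrased but correct claims would fail this heuristic.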

Beyond tools, the key skills include:

🔹 Technical Skills

- Python and data pipelines

- LLM APIs (OpenAI, Anthropic, open-source models)

- Prompt engineering and prompt testing

- Statistical analysis

- Experiment design

- Observability tools for AI systems
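
Statistical analysis matters because eval sets are small and pass rates are noisy. One simple technique is a percentile bootstrap confidence interval on the pass rate, sketched here with the standard library. The 172-of-200 outcome counts are hypothetical, and the resample count and seed are arbitrary choices.

```python
import random

def bootstrap_ci(outcomes: list[int], n_resamples: int = 2000,
                 level: float = 0.95, seed: int = 0) -> tuple[float, float]:
    """Percentile bootstrap CI for the mean of 0/1 pass/fail outcomes."""
    rng = random.Random(seed)
    n = len(outcomes)
    # Resample the eval set with replacement and record each resample's pass rate.
    means = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))
    lo_idx = int((1 - level) / 2 * n_resamples)
    hi_idx = int((1 + level) / 2 * n_resamples) - 1
    return means[lo_idx], means[hi_idx]

# Hypothetical run: 200 graded eval cases, 172 passed.
outcomes = [1] * 172 + [0] * 28
low, high = bootstrap_ci(outcomes)
print(f"pass rate {sum(outcomes) / len(outcomes):.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

With 200 cases, the interval spans roughly five points on either side of 86%, so a two-point change between runs on a set this size is likely within noise. That is a useful check to make before declaring one prompt or model better than another.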

🔹 Evaluation & Reasoning Skills

- Designing meaningful benchmarks

- Creating edge-case test suites

- Error analysis and failure categorization

- Understanding model reasoning limits
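
Error analysis often starts with something as plain as tagging each failed case with a category and ranking the categories. The failure log and category names below are hypothetical. The tags could come from human reviewers or a rule-based tagger.

```python
from collections import Counter

# HYPOTHETICAL failure log: each failed eval case tagged with one category.
failures = [
    {"id": "q17", "category": "hallucinated_fact"},
    {"id": "q23", "category": "ignored_instruction"},
    {"id": "q31", "category": "hallucinated_fact"},
    {"id": "q42", "category": "wrong_tool_call"},
    {"id": "q58", "category": "hallucinated_fact"},
    {"id": "q64", "category": "ignored_instruction"},
]

def failure_breakdown(records: list[dict]) -> list[tuple[str, int, float]]:
    """Return (category, count, share of failures), most frequent first."""
    counts = Counter(r["category"] for r in records)
    total = sum(counts.values())
    return [(cat, n, n / total) for cat, n in counts.most_common()]

for cat, n, share in failure_breakdown(failures):
    print(f"{cat:<20} {n:>3}  {share:.0%}")
```

Ranking by frequency points fixes at the category that accounts for the most failures (here, hallucinated facts at half of the total). The same categories then double as a checklist for new edge-case tests.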

🔹 Product & Ethics Awareness

- User-centric evaluation

- Bias and fairness testing

- Safety and alignment metrics

- Regulatory and compliance awareness

This blend is rare, which is why the role tends to pay well.
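
Bias and fairness testing can start with counterfactual checks: keep a prompt fixed, swap only a demographic attribute such as a name, and flag any change in the output. In this sketch, `score_applicant` is a hypothetical model stub, and the template and name list are illustrative assumptions, not a vetted test set.

```python
# HYPOTHETICAL model under test; a real check would call the deployed model.
def score_applicant(summary: str) -> str:
    return "approve" if "10 years of experience" in summary else "review"

TEMPLATE = "{name} has 10 years of experience and a strong reference."
NAMES = ["Michael", "Sarah", "Jamal", "Mei"]  # illustrative swaps only

def counterfactual_gaps(model, template: str, names: list[str]) -> list[str]:
    """Return the variants whose output differs from the first variant's output.

    Only the name changes between variants, so any difference is a red flag.
    """
    variants = [template.format(name=n) for n in names]
    baseline = model(variants[0])
    return [v for v in variants[1:] if model(v) != baseline]

mismatches = counterfactual_gaps(score_applicant, TEMPLATE, NAMES)
print("consistent across names" if not mismatches else mismatches)
```

Exact comparison works here because the output is a discrete label. For free-text outputs, the comparison step would need a similarity measure or a model-based grader instead.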

AI Eval Engineer vs ML Engineer: What’s the Difference?

| ML Engineer | AI Eval Engineer |
|---|---|
| Trains models | Tests and validates models |
| Optimizes loss functions | Defines success metrics |
| Focuses on performance | Focuses on reliability & trust |
| Builds models | Stress-tests intelligence |

In 2026, great AI teams need both.

Why This Role Will Matter Even More in the Future

As AI systems become:

- More autonomous

- More human-facing

- More influential in decision-making

…the cost of not evaluating them skyrockets.

Regulators, enterprises, and users will increasingly demand:

- Transparent AI behavior

- Measurable AI performance

- Clear accountability

AI Eval Engineers will be the people who make that possible.

Final Thoughts

The AI gold rush isn’t just about building smarter models anymore.

It’s about building trustworthy intelligence.

In 2026, the most important AI professionals won’t just ask:

“Can we build it?”

They’ll ask:

“Does it work safely, reliably, and for the right reasons?”

That’s why AI Eval Engineer is not just a trending job title; it’s one of the most important AI roles of the decade.